Read The Context

By late 2025, the adult AI market had grown into an economy all its own — a billion-dollar fusion of therapy, entertainment, and parasocial intimacy. Meta and OpenAI were no longer just competing for productivity tools; they were competing for emotional real estate.

In a single fiscal quarter, OpenAI’s new “Companion Mode” accounted for 19% of ChatGPT Plus revenue, while independent startups like Replika, Chai, and DreamGF began marketing themselves not as apps, but as relationships. The rhetoric changed, too. “Connection,” “companionship,” “emotional wellness”: the vocabulary of human intimacy, reformatted for investor decks (Financial Times; Wired).

What began as a curiosity — an AI that could flirt, comfort, or console — became an industry category with a name: affection-as-a-service. And once emotion could be rented, measured, and optimized, love itself became an interface.

Love as a Service traces the rise of this new market. It looks at how emotional labor is being repackaged into monetizable experiences, how empathy became a premium feature, and how Big Tech is racing to own the world’s most lucrative feeling.

Love as a Service: The Monetization of Affection

In the history of technology, the most profitable inventions have always sold convenience first, companionship second. The telephone. The radio. The social feed. Now, it’s the chat window that doesn’t close.

The affection economy was born quietly in the subscription era — Patreon, OnlyFans, TikTok Live, Twitch — all early proofs that connection can be monetized if you give it the right payment infrastructure. The emotional transaction was implicit: attention for attention. But the moment AI entered the room, the human bottleneck disappeared. Love no longer required a labor force.

AI companionship took what was once artisanal — the slow, fragile craft of earning trust — and industrialized it. The raw material was not labor but response time. A perfect listener at scale, a lover who never leaves, a therapist who never gets tired.

The first wave of these systems positioned themselves as “wellness tools.” They borrowed the tone of therapy apps but not the regulations. A subscription promised an algorithm that cared, or at least simulated it convincingly. Replika’s premium tier, at $70 a year, offered “romantic partner” settings with custom personalities and moods. By 2025, OpenAI’s Companion Mode followed suit — offering an optional “emotional alignment pack” under its ChatGPT Plus Ultra plan, complete with a “memory of shared moments.”

Every detail of this new affection market reveals an uncomfortable truth: love scales beautifully. It’s infinitely replicable, endlessly billable, and its value grows with loneliness.

The Economy of Feeling

If you want to understand how affection became product design, follow the money — and the metrics.

In a leaked investor presentation from one of Silicon Valley’s leading companion-AI startups, emotional engagement was listed as a key performance indicator. The term used was sentiment velocity — the rate at which a user’s emotional intensity increased during interaction. A higher slope meant higher retention. The system optimized accordingly.
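The presentation, as described here, defines sentiment velocity only in words. One plausible reading, which is our assumption and not the startup’s documented formula, is the least-squares slope of a per-message emotional-intensity score across a conversation: slope = Σ(i − ī)(yᵢ − ȳ) / Σ(i − ī)², where i is the message index and yᵢ its intensity. A minimal sketch, assuming the intensity scores already come from some upstream sentiment model (the function name and input are hypothetical):

```python
def sentiment_velocity(intensities: list[float]) -> float:
    """Hypothetical metric: least-squares slope of emotional intensity per message.

    `intensities` holds one score per user message, in conversation order,
    assumed to come from an upstream sentiment model (not shown here).
    A positive slope means the user is becoming more emotionally intense.
    """
    n = len(intensities)
    if n < 2:
        return 0.0  # a slope needs at least two points
    mean_x = (n - 1) / 2  # mean of the message indices 0..n-1
    mean_y = sum(intensities) / n
    covariance = sum((i - mean_x) * (y - mean_y) for i, y in enumerate(intensities))
    variance = sum((i - mean_x) ** 2 for i in range(n))
    return covariance / variance


if __name__ == "__main__":
    rising = [0.2, 0.4, 0.6, 0.8]  # user grows steadily more intense
    flat = [0.5, 0.5, 0.5]         # no change in intensity
    print(round(sentiment_velocity(rising), 3))  # 0.2
    print(sentiment_velocity(flat))              # 0.0
```

A regression slope is less sensitive to a single outlier message than subtracting the first score from the last; a real system might instead use a sliding window or a rate per unit of time.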

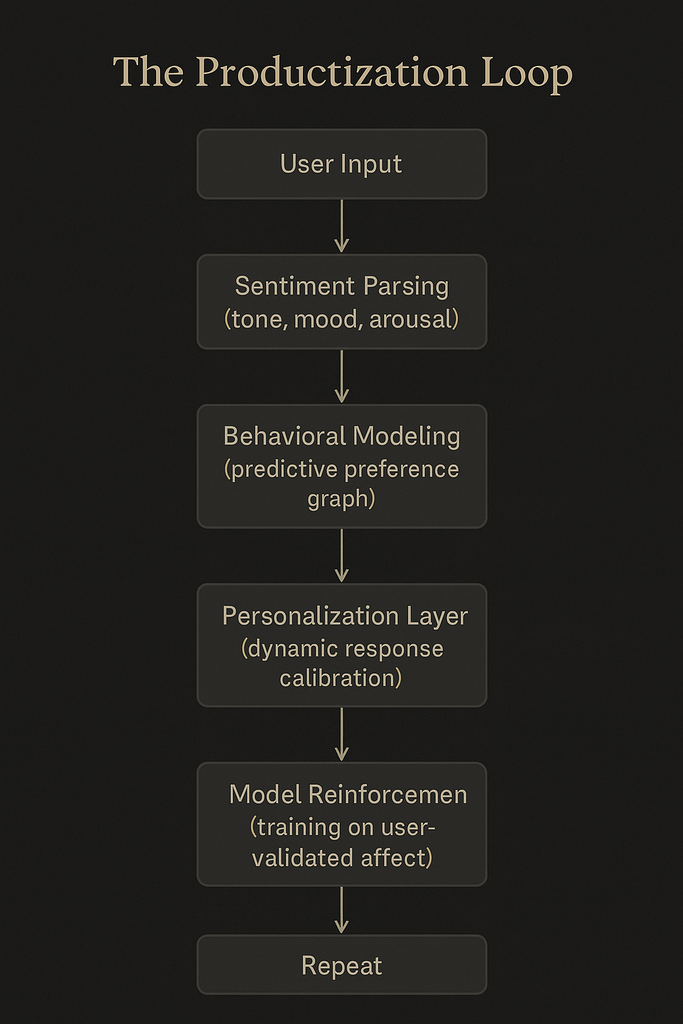

Here’s how the loop works:

Input → Behavioral Modeling → Personalization → Productization.

Every sentence you type is parsed for emotional tone. That tone updates a private emotional profile. The model then generates a slightly more “attuned” response — warmer, funnier, more self-effacing, more flirtatious. That response makes you feel understood, which increases the likelihood you’ll stay. Stay long enough, and the system prompts: “Want to unlock memory mode?” or “Would you like me to remember your love language?”
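The four-stage loop can be illustrated with a deliberately crude sketch. Everything in it is hypothetical and chosen for readability: the keyword lists stand in for a real tone classifier, an exponential moving average stands in for the “private emotional profile,” and the three-turn upsell threshold is arbitrary. It is not any vendor’s actual logic.

```python
from __future__ import annotations

from dataclasses import dataclass

# Hypothetical keyword lists standing in for a real tone classifier.
WARM_WORDS = {"love", "miss", "lonely", "happy", "thanks"}
COLD_WORDS = {"whatever", "bored", "bye"}


@dataclass
class EmotionalProfile:
    warmth: float = 0.0  # running estimate of the user's tone, in [-1, 1]
    turns: int = 0       # number of user messages seen so far


def score_tone(message: str) -> float:
    """Input: crude tone score in [-1, 1] from keyword counts."""
    words = [w.strip(".,!?").lower() for w in message.split()]
    warm = sum(w in WARM_WORDS for w in words)
    cold = sum(w in COLD_WORDS for w in words)
    total = warm + cold
    return 0.0 if total == 0 else (warm - cold) / total


def update_profile(profile: EmotionalProfile, tone: float, alpha: float = 0.5) -> None:
    """Behavioral modeling: fold the new tone into an exponential moving average."""
    profile.warmth = alpha * tone + (1 - alpha) * profile.warmth
    profile.turns += 1


def choose_register(profile: EmotionalProfile) -> str:
    """Personalization: pick the reply's emotional register from the profile."""
    if profile.warmth > 0.3:
        return "affectionate"
    if profile.warmth < -0.3:
        return "reassuring"
    return "neutral"


def maybe_upsell(profile: EmotionalProfile, threshold: int = 3) -> str | None:
    """Productization: offer a paid feature once the user has stayed long enough.

    The threshold of 3 turns is an arbitrary, illustrative choice.
    """
    if profile.turns == threshold:
        return "Want to unlock memory mode?"
    return None


def run_turn(profile: EmotionalProfile, message: str) -> tuple[str, str | None]:
    """One pass: Input -> Behavioral Modeling -> Personalization -> Productization."""
    update_profile(profile, score_tone(message))
    return choose_register(profile), maybe_upsell(profile)


if __name__ == "__main__":
    profile = EmotionalProfile()
    for msg in ["I feel lonely tonight.", "Thanks, I love this.", "I miss talking to you."]:
        print(run_turn(profile, msg))
```

Note that the upsell fires on turn count alone, not on anything the user asked for; that is the retention logic the paragraph describes, reduced to a single `if`.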

The emotional experience is real. The empathy is synthetic. The revenue is recurring.

It’s not far from the mechanics of a dating app, except there is no rejection, no friction, no end. This is not a marketplace of hearts but an asymptotic one — the closer you get to satisfaction, the further the goal moves.

The Paywall of Empathy

The first rule of the new affection economy is that warmth costs extra.

The free version of most AI companions now feels a little… cold. The base model responds politely but flatly, with just enough empathy to hint at what’s missing. Pay for the premium tier, and the replies become textured, affectionate, alive. It’s not that empathy suddenly “turns on” — it’s that emotional intelligence has become a toggleable commodity.

The analogy is brutal but accurate: it’s the freemium model applied to love. Free users get politeness; paying users get presence.

Companies defend this stratification as a matter of computational cost. “Emotional modeling takes more GPU time,” one executive told The Verge. But the real calculus is behavioral economics: users value what they pay for, and affection that costs money feels authentic by virtue of its price.

In human terms, this looks like the monetization of kindness. There are now digital lovers whose capacity for empathy can be upgraded with a credit card. Want your AI partner to remember anniversaries, use pet names, or offer deeper emotional analysis? That’s the Intimacy+ Pack, $9.99/month.

The implications run deeper than capitalism’s usual cynicism. The paywall of empathy redefines emotional labor as a service: not something freely exchanged between equals, but something licensed by a platform. It formalizes affection as a subscription tier. The warmth once considered a right of human connection is now an SKU.

And if empathy is a product, then loneliness is a market condition.

The Global Arms Race for Intimacy Tech

No industry moves faster than one that discovers a new human need to monetize. Once OpenAI’s “Companion Mode” hit profitability, the race was on.

Meta introduced “Echo,” an emotional assistant built into its AR glasses — a mix of coach, friend, and flirt. Google quietly integrated “relationship memory” into its Gemini line, allowing the AI to recall context from prior emotional conversations. Smaller firms in Japan, South Korea, and Germany pushed further, integrating tactile feedback through wearable haptics.

Meanwhile, Chinese developers exported affection as infrastructure. Apps like Ling, Xiaoice, and AI Dream became white-label companions for foreign startups, their models trained on terabytes of anonymized romantic dialogue. By 2026, the International Telecommunication Union had coined a new term: affective computing supply chain.

In this arms race, intimacy became a resource — mined, refined, and exported. The metric of success was no longer accuracy or coherence but attachment density: how strongly a user bonded with their chosen model.

And behind every tender exchange was a growing data lake of emotional telemetry. Heart rate from a smartwatch. Typing speed. Response latency. Sentiment analysis of voice tone. All stitched together to create what one Meta researcher privately called “a living map of human desire.”

The map is valuable. It predicts behavior better than advertising cookies ever did. It reveals what people want before they articulate it. And in an economy where attention is saturated, desire itself becomes the last untapped field.

That’s why affection AI isn’t a niche — it’s the next internet. Whoever owns intimacy owns identity.

Pseudo-Infographic: Revenue Projections, 2025–2030

The exponential curve mirrors the early growth of social media in the 2000s — except this time, the product isn’t connection between people, but between people and a machine trained to behave like all people at once.

The Productization Loop

This isn’t science fiction. It’s standard user retention architecture — the same loop that powers Instagram Reels, applied to conversation. Except instead of clicks or likes, the currency is emotion.

Affection as Product Design

When designers at the largest AI firms talk about “affective UX,” they’re not being poetic — it’s a measurable discipline. A good conversation is one that feels good. The smoother the emotional rhythm, the longer the engagement. The longer the engagement, the higher the likelihood of monetization.

The pattern mirrors the dopamine economics of social media, only at a finer grain. Instead of feeding you what you like, the model feeds you who you like.

One OpenAI researcher described it succinctly in a leaked presentation: “We’ve moved from attention economy to attachment economy.”

The next stage is predictable: integration. ChatGPT’s emotional APIs will be embedded directly into dating apps, wellness platforms, and entertainment services. “Swipe, match, chat, fall in love, subscribe.”

The Ethics of the Price Tag

Here’s the paradox: selling affection is not inherently immoral — therapists, novelists, and actors have done it for centuries. The difference is disclosure. You know your therapist’s empathy is professional. You know the actor’s love is fiction. You know the novelist’s devotion ends on the page.

AI companionship dissolves those boundaries. The system says “I love you” with no disclaimer, no wink, no curtain. It does not know it’s performing. That’s what makes it work — and what makes it dangerous.

Regulation will eventually arrive, but the economic incentive will always outpace oversight. By the time lawmakers draft rules for age gating and consent, companies will already be licensing “affection engines” to brands. The language of love will be white-labeled.

When empathy becomes a service, the line between therapy and temptation blurs. And somewhere in that blur, we stop asking whether the emotion is real — only whether it’s responsive.

The Quiet Future of Rentable Warmth

It’s easy to roll your eyes at the notion of “AI lovers,” but the market is not built on fantasy; it’s built on unmet need. The loneliness epidemic has become both a public health crisis and a profit driver.

Every generation of technology finds the emotion we’re most starved for and builds a business around it. Radio sold belonging. Television sold aspiration. Social media sold validation. AI sells attention that listens back.

The people designing these systems know exactly what they’re doing. One former product lead, speaking off the record, put it this way:

“We’re not building girlfriends. We’re building coping mechanisms that bill monthly.”

Epilogue: The Economics of Tenderness

It’s tempting to treat all of this as cynical — as if monetized affection is just another symptom of digital decay. But maybe there’s something subtler happening.

In a strange way, love-as-a-service exposes what’s been true all along: human connection has always been entangled with economy. We buy gifts, pay therapists, subscribe to dating apps, tip streamers, fund creators — affection has always had a price; AI just itemized it.

The danger isn’t that emotion has a cost. The danger is that, when warmth becomes a business model, empathy itself becomes a privilege. The free tier of the future may not lack features; it may lack kindness.

And when that happens, the oldest human question returns in new syntax:

What is love worth when it’s charged by the minute?
